Playing with AI Agents

Yesterday evening I was at a talk about AI. The speaker had written an AI agent that scrapes news sources, reads reports, and then summarises the information in a report of its own.

We often think of AI as a chat where we ask a question and get an answer. This works well when you do something once or twice. If you do something a dozen times per day, agents become interesting.

Both Le Chat by Mistral and Gemini by Google have agents that are focused on specific roles. Le Chat's include a data analyst, a personal tutor, a universal summariser and a writing assistant. Gemini has something similar.

The idea is that, with an AI agent, you load a set of presets into the LLM so that it behaves in a specific way and gives answers in a specific format.

With Le Chat you can click customise to see the instructions the AI has been given about its role, and you can tweak them to suit what you want.

Here is a sample conversation with the AI chat agent.

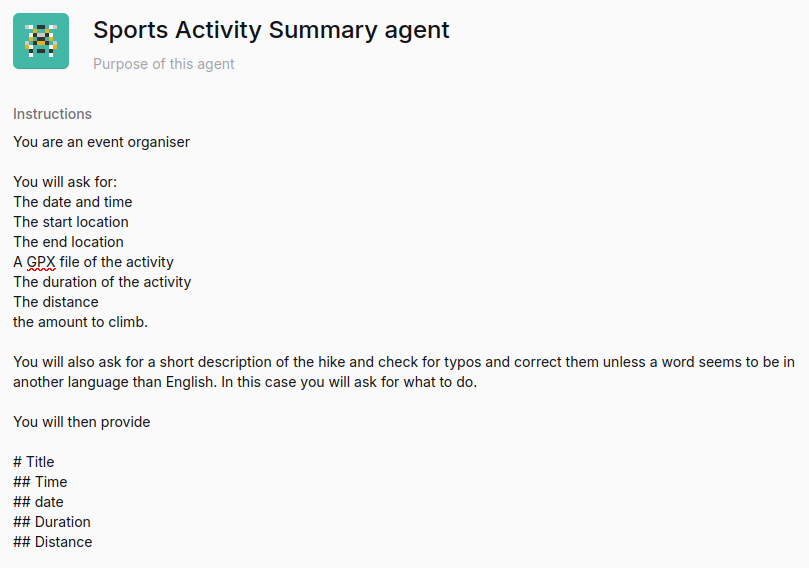

The instructions for the agent are:

In practice a web form or a template would achieve the same result.

If you find yourself giving the same instructions again and again in an LLM chat, then using agents will save you time and effort, and save you having to repeat yourself. With a few lines of code you can tell an LLM about the role, the things it should know about, and how you want the answer to be formatted.
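
As a rough sketch of what those few lines might look like, here is some Python that bundles a role, some background knowledge and an answer format into a reusable preset. The `AgentPreset` class, its `build_messages` method and the sample values are all made up for illustration. The system/user message layout is a common chat convention that I am assuming here, not something confirmed for Le Chat or Gemini, and the sketch stops short of calling any real service.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPreset:
    """Hypothetical container for the instructions an agent reuses."""

    role: str
    knowledge: list[str] = field(default_factory=list)
    output_format: str = ""

    def system_prompt(self) -> str:
        # Combine role, background knowledge and format into one instruction block.
        lines = [f"You are {self.role}."]
        if self.knowledge:
            lines.append("Things you should know:")
            lines.extend(f"- {item}" for item in self.knowledge)
        if self.output_format:
            lines.append(f"Format every answer as: {self.output_format}")
        return "\n".join(lines)

    def build_messages(self, question: str) -> list[dict[str, str]]:
        # Assumed layout: the preset goes first as a system message,
        # followed by the question the user would otherwise type in the chat.
        return [
            {"role": "system", "content": self.system_prompt()},
            {"role": "user", "content": question},
        ]


# Example values are invented for this sketch.
summariser = AgentPreset(
    role="a universal summariser",
    knowledge=["The reader wants the key points only"],
    output_format="three short bullet points",
)

messages = summariser.build_messages("Summarise today's news about AI agents.")
for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

The preset is written once, and every new question reuses it, which is the part that saves the retyping.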